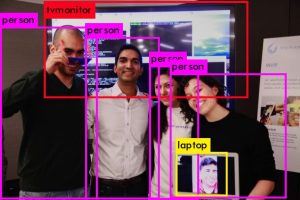

INVIP is an electronic travel aid that helps visually impaired individuals understand their surroundings and move safely around indoor locations.

We are a group of passionate engineers, psychologists, and developers hoping to help visually impaired people lead more independent lives. Nicolas Metallo, Brenda Truong, and Nuvina Padukka are Executive Management of Technology (eMOT) students at the Tandon School of Engineering. Isabel Izquierdo is completing an MPH at Columbia University and graduates this year. Edson Tapia holds an MS in Psychology from the University of Barcelona and is currently a professor at the University of Guadalajara.

More than 120,000 people in New York City are visually impaired. Every day they struggle to travel and commute, they get injured by obstacles their white cane can't detect, and they are frustrated by regularly arriving late. They also feel limited when they can't read a sign or a document, and embarrassed when they have to ask for help in sensitive or private situations.

That's why we are working on a wearable voice assistant that provides object recognition for visually impaired individuals who want to be more independent in their daily activities. In other words, we want to give "eyes" to the blind.

To do that, we first needed to understand the problem and its pain points. Our starting point was within our own team: our friend Edson. He's a psychologist, a university professor, and a sports lover. He's also visually impaired. Drawing on his deep understanding of the challenges that a visually impaired person (VIP) faces every day, we started to draft what INVIP should do.

But we didn't stop there; we "got out of the building" to get feedback on our assumptions and hypotheses. We talked with institutions that work with the blind community (view map) and started interviewing users. We confirmed that among the most urgent challenges for VIPs are knowing what obstacles are in front of them, especially those above the waist, and being able to read signs and documents independently. We also learned that VIPs are very social: they want an assistive technology that helps them, but they don't want to depend on it completely. Interacting with people is essential.

On the technical side, we are currently researching different options. Many users have told us they would prefer to interact with their devices by voice, and that speed, reliability, and data privacy are important to them. We are testing a mobile app paired with a wearable device that works either in the cloud (Microsoft/Google APIs) or stand-alone (with processing done by embedded electronics).

Thanks to the opportunity and support NYU has given us through the Prototyping Fund, we are able to search for product-market fit and test our assumptions and hypotheses with users. Our goal is to develop an MVP that we can present to potential investors and that delivers measurable social impact to our users.